Casino Angels & Bonus Demons

The CPU and the memory should go hand in hand. You cannot expect great performance from the CPU without support from the memory (RAM). Memory stabilizes the whole system, so a larger memory size usually gives you a faster and more stable computer.

What type of hard drive came with your console? Is it a traditional 5400rpm model? If so, seriously consider getting a 7200rpm HDD or a more advanced SSD. Solid State Drives are typically the best solution in data storage when it comes to speed, reliability and life-span. These drives are often 2-4 times faster than regular HDDs, with faster data read and write times. The only downside of these drives is their price. Even though they cost more, you should treat one as an investment in your gaming future. Once you get such a drive, chances are you won't have to replace it again.

The public knows very little about the inner workings of a slot machine. People are unaware of the true odds of an electronic gaming machine, and they do not realize that the odds are truly stacked against them.

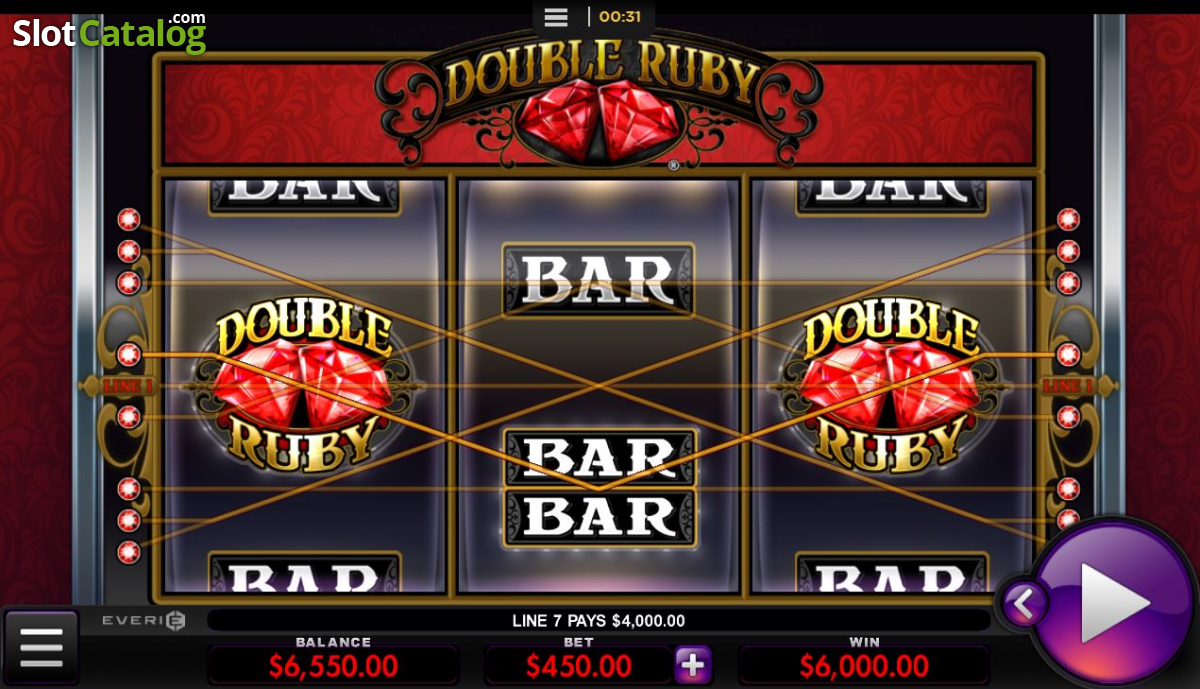

Atomic Age Slots for the High Roller – $75 Spin Slots: This is a slot game from Rival Gaming casinos that allows you to wager a maximum of 75 coins per spin. The largest coin denomination is $1. This slot focuses on the 1950s era of American pop culture. It is a video slot game with the latest sounds and graphics. The wild symbol in the game is the drive-in icon, and the icon that lets you win the most is the atom symbol.

Another tip on how to save your bankroll when you play slots is to set aside your profit when you win, but leave a small portion for your bankroll. Do not get carried away when you win. Slot players often get very excited when they win, and they continue to spin until they lose all their profits and their bankrolls. Putting aside your profit (heylink.me/TULIP189) will ensure you have a budget for future spins. It is also good to take a break between games.
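The set-aside tip above can be sketched as simple arithmetic. This is only an illustration under assumptions: the function name `settle_spin`, the `keep_fraction` parameter, and its 25% default are hypothetical and not from any casino or library. The idea is that after a winning spin, most of the profit moves into a separate "banked" pile that is never wagered again, and only a small slice goes back into the playing bankroll.

```python
def settle_spin(bankroll, banked, stake, payout, keep_fraction=0.25):
    """Apply one spin and set aside most of any profit.

    bankroll      -- money still available for wagering
    banked        -- profit already set aside (never wagered again)
    stake         -- amount wagered on this spin
    payout        -- amount the machine returns for this spin (0 on a loss)
    keep_fraction -- hypothetical share of the profit kept in the bankroll
    """
    if stake <= 0 or stake > bankroll:
        raise ValueError("stake must be positive and no larger than the bankroll")
    if not 0 <= keep_fraction <= 1:
        raise ValueError("keep_fraction must be between 0 and 1")

    bankroll -= stake            # pay for the spin
    profit = payout - stake      # positive only on a winning spin

    if profit > 0:
        kept = profit * keep_fraction
        bankroll += stake + kept     # stake back, plus a small slice of the profit
        banked += profit - kept      # the rest is set aside
    else:
        bankroll += payout           # loss or partial return: nothing to bank

    return bankroll, banked


# Example: a 5-coin spin that pays 25 on a 100-coin bankroll.
bankroll, banked = settle_spin(100.0, 0.0, stake=5.0, payout=25.0)
print(bankroll, banked)
```

Keeping the banked amount in a separate variable mirrors the advice in the text: once profit is set aside, later spins cannot touch it.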

First leg against Bayern at home: We must score and secure a good result against them so that we can use it in the return leg. Thus, I suggest using option 1, with Iniesta in midfield and Henry in attack. This formation has the potential to secure an important win while not allowing Bayern many chances of a comeback in the second leg.

Others assume that if a machine has just paid out a fairly large amount, it will not pay out again for a period of time. Who knows whether any of these strategies really work. One thing is certain: if there is any strong indication that they do, manufacturers will soon do what they can to change that.

The problem is that it's an almost impossible question to answer, because casinos make it difficult to decide by changing the rules of the game while marketing them as being the same.

…
